Basic Concepts

What is Ori Global Cloud?

Ori Global Cloud (OGC) is an end-to-end cloud computing solution focused on Machine Learning (ML) and Artificial Intelligence (AI). OGC combines infrastructure services with higher-level ML and AI services, covering the full range of needs in this fast-moving field.

End-to-End ML/AI Solutions

Comprehensive Infrastructure Services

At the heart of OGC's offerings are scalable infrastructure services designed for the demanding requirements of ML and AI applications. These services provide the foundations for high-performance computing, including specialised GPU resources and tailored Kubernetes clusters, and are engineered to handle complex computations and large-scale data processing for ML and AI projects.

High-Value ML and AI Services

Beyond infrastructure, OGC delivers higher-level ML and AI services. These include ML VMaaS, which offers ready-to-use virtual machines pre-installed with essential ML tools and frameworks, and a Kubernetes-based service that integrates Kubeflow for managing ML workflows. Together, these services streamline the ML lifecycle, from data processing and model development to training, inference, and deployment.

Simplifying ML Operations

OGC simplifies ML operations (MLOps) so that businesses and developers can focus on their models rather than on infrastructure management. Its automated machine learning (AutoML) and model optimisation tools support the development of high-quality ML models with minimal coding, making AI technologies accessible to a broader range of users.

Collaborative and Scalable Environment

OGC supports collaboration at scale through shared workspaces and integrated development environments. This helps teams develop, test, and deploy ML models efficiently, and keeps projects both technically sound and aligned with business objectives.

OGC Core Components

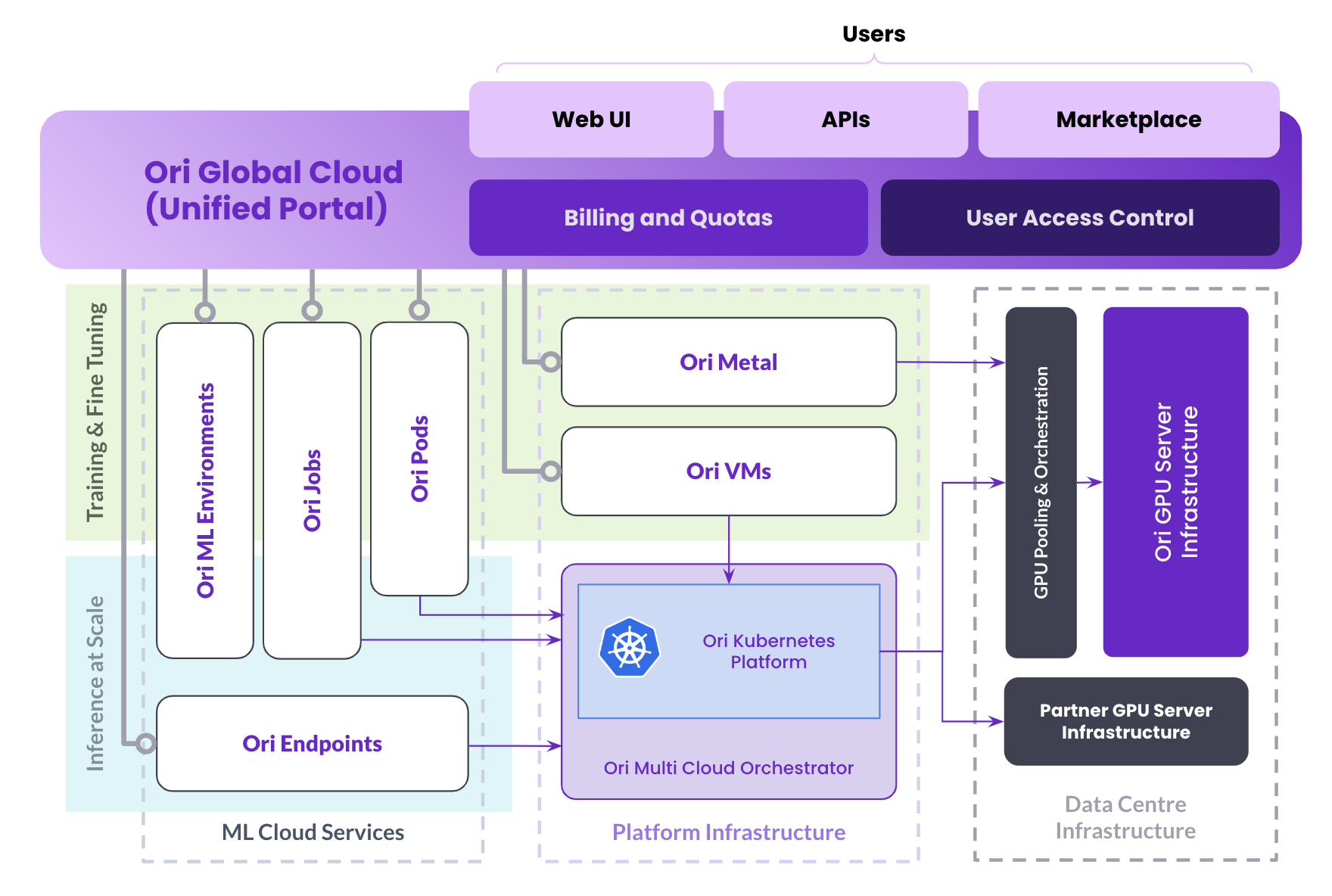

The image above is a high-level architecture diagram of the Ori Global Cloud Platform. It outlines the various components of the platform and their interactions, designed to serve users engaging with ML/AI applications.

At the top, we have the "Users" layer, which is the entry point and includes three main interfaces:

- Web UI: A graphical user interface for interacting with the platform's services.

- APIs: Application Programming Interfaces that allow for programmatic access and control over the services.

- Marketplace: A hub where users can find and use additional services or applications.

Below this, there are services related to user management:

- Billing and Quotas: This component manages the financial transactions and usage limits of users.

- User Access Control: This system controls user permissions and access to various services within the platform.

The core of the platform is divided into several main services:

- Ori ML Environments: Dedicated environments for training and fine-tuning ML models.

- Ori Jobs: A service for executing and managing computational jobs.

- Ori Pods: Containerized applications or services running on the platform.

- Ori Endpoints: Interfaces to ML cloud services that can be used for inference at scale.

Additionally, we have infrastructure-related services:

- Ori Metal: Direct access to physical hardware resources, likely for performance-intensive tasks.

- Ori VMs: Virtual Machines that users can provision and use according to their needs.

At the bottom of the diagram, central management services are shown:

- Ori Kubernetes Platform: The managed Kubernetes service for orchestrating containerized applications.

- Ori Multi Cloud Orchestrator: A tool for managing resources across multiple cloud providers, ensuring optimal performance and resource utilization.

Finally, on the right side, the diagram shows the underlying infrastructure, broken down into two parts:

- Partner GPU Server Infrastructure: High-performance GPU servers from partner services integrated into the platform.

- Data Centre Infrastructure: The physical data centres where the platform's resources and services are hosted.

This architecture is designed to offer a unified portal where users can access a wide range of ML/AI services, orchestrate workloads across multiple clouds, and leverage GPU acceleration, all within a managed and secure environment.

To learn more about OGC, continue with the concepts below or follow our step-by-step Quick Start Guide.

User Sign-up

The user onboarding process for OGC is designed to be simple. The first step is to sign up for an account by visiting the OGC website and filling out a registration form. When a user signs up to OGC for the first time, they are assigned the Owner role within their Organisation, which gives them administrative privileges. The user is also given access to the OGC documentation and tutorials so they can become familiar with the platform's features and functionality. From there, they can start onboarding clusters and packaging and deploying their applications through OGC's user interface and orchestration features.

User Access Management

OGC's user access management provides a flexible and secure way to control access to the platform, ensuring that only authorized users can reach the resources they need for their tasks while meeting compliance and security requirements. Granular access controls restrict access to specific features based on a user's role. You can create users and assign them roles with different levels of access and permissions, ranging from read-only to full administrative access. Each user is assigned one of the roles Owner, Editor or Viewer when the user is created. Once a user is created on the platform, they receive an invitation to join. Users authenticate with their email address and password, and an administrator can revoke their access at any time.
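The role checks described above can be pictured as a simple lookup. This is a minimal sketch, not OGC's implementation: the role names come from the text, but the action names (`read`, `write`, `manage_users`) and the exact permissions given to Editor are assumptions. The text only states that access ranges from read-only to full administrative access and that an administrator can revoke it.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    OWNER = "Owner"
    EDITOR = "Editor"
    VIEWER = "Viewer"


# Hypothetical action names and permission sets, for illustration only;
# OGC's actual permission model may differ.
PERMISSIONS = {
    Role.OWNER: frozenset({"read", "write", "manage_users"}),
    Role.EDITOR: frozenset({"read", "write"}),
    Role.VIEWER: frozenset({"read"}),
}


@dataclass
class User:
    email: str
    role: Role
    active: bool = True  # set to False once an administrator revokes access


def is_allowed(user: User, action: str) -> bool:
    """A revoked user may do nothing; otherwise the user's role decides."""
    return user.active and action in PERMISSIONS[user.role]


viewer = User("viewer@example.com", Role.VIEWER)
print(is_allowed(viewer, "read"))   # True
print(is_allowed(viewer, "write"))  # False
viewer.active = False               # access revoked by an administrator
print(is_allowed(viewer, "read"))   # False
```

Keeping revocation as a separate flag means a user's role is preserved if access is later restored.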

Please read our step-by-step Quick Start guide on Organisations to learn how to add users to your organisation and choose the roles that set the right permissions.

Ori Inference Orchestration

Ori Global Cloud (OGC) Inference Orchestration introduces the following core concepts for deploying and managing AI/ML services:

- Infrastructure Clusters: OGC allows users to onboard and manage Kubernetes clusters within the platform, providing a centralized location to manage and deploy their applications and services.

- Projects: Users can create and manage projects within the platform, allowing them to organize and manage their applications and services.

- Packages: Users can package their applications as self-contained, portable units, making it easy to deploy and orchestrate them across different cloud environments.

- Deployments: Users can deploy their packaged applications on Kubernetes clusters across different cloud environments, including popular platforms such as AWS, Azure, and Google Cloud.

Together, infrastructure clusters, projects, packages, and deployments give users a framework for managing and automating their cloud-based applications and services on OGC, so they can deploy them at scale with less complexity.

Clusters

A Cluster is the Ori entity that encapsulates all the information OGC needs about a remote compute cluster owned by your organisation. By working with the Clusters associated with your organisation, you and your team can perform the required fleet management tasks.

Currently, only Kubernetes (K8s) clusters are supported by OGC, and so most of the Cluster properties and associations map fairly directly to Kubernetes constructs.

Onboarding your organisation's K8s cluster to the Ori platform takes just a few clicks. Once remote access is enabled, you can use the platform's fleet management and resource management features on your cluster, which simplifies managing your K8s deployments.

Learn how Infrastructure-Provisioning works on OGC.

Projects

A Project is an essential OGC entity: it is where you create and manage packages and application deployments. A project stores the resources related to it, such as packages, application deployment configurations, and user-defined secrets. It also lets you configure and access images from registries such as Docker Hub and from private registries.

A project gives you greater control over your packages and their associated resources.

To create a new project, follow our guide on Projects.

Packages

A package is how you deploy applications on the Ori platform. It is an explicit declaration of all the information required to deploy and manage a modern, cloud-native “application” across multiple clusters, encapsulating the applications, route policies, environment variables, and mounts that a containerized application needs to run. With packages, users can deploy applications quickly without handling every detail of running an application or service in a containerized environment.

Because packages simplify deploying applications on the Ori platform, they eliminate manual configuration and save considerable time and effort.

View our guide on Packages to understand how packages are created, configured and deployed on OGC.

Selectors

Selectors are key-value pairs included in a Workload definition and used by the Orchestrator during Planning to determine where a Workload can be deployed. Selectors are matched against Labels on Nodes to decide whether a given Node is suitable for running a given Workload.
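Selector matching against node labels can be sketched as below, assuming exact key-value equality and that every selector must match (AND semantics). The function names and the `gpu` and `region` labels are hypothetical, and OGC's orchestrator may support richer matching rules.

```python
def matches(selectors: dict[str, str], labels: dict[str, str]) -> bool:
    """Assumed rule: every selector key must appear in the node's labels
    with the same value; extra labels on the node are ignored."""
    return all(labels.get(key) == value for key, value in selectors.items())


def suitable_nodes(selectors: dict[str, str],
                   nodes: dict[str, dict[str, str]]) -> list[str]:
    """Return the names of nodes whose labels satisfy the selectors."""
    return [name for name, labels in nodes.items() if matches(selectors, labels)]


# Hypothetical nodes and their labels.
nodes = {
    "node-a": {"gpu": "a100", "region": "eu-west"},
    "node-b": {"gpu": "t4", "region": "eu-west"},
    "node-c": {"region": "us-east"},
}

print(suitable_nodes({"gpu": "a100"}, nodes))        # ['node-a']
print(suitable_nodes({"region": "eu-west"}, nodes))  # ['node-a', 'node-b']
```

Under these assumptions, an empty selector set matches every node, because `all()` over an empty sequence returns `True`.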

Containers

Containers let you configure the image, registry, commands and arguments, as well as the resources required to run a container. You can also specify environment variables and secrets for runtime configuration, and define ports with port mapping to expose the container on the network.
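A container definition with these settings could be modelled as a small data structure. The field names, the `docker.io` default registry and the validation rules below are assumptions made for illustration, not OGC's package schema; `secrets` holds the names of hypothetical project secrets rather than their values.

```python
from dataclasses import dataclass, field


@dataclass
class PortMapping:
    container_port: int  # port the process listens on inside the container
    exposed_port: int    # port exposed on the network


@dataclass
class Container:
    name: str
    image: str
    registry: str = "docker.io"  # assumed default registry
    command: list[str] = field(default_factory=list)
    args: list[str] = field(default_factory=list)
    cpu: float = 1.0             # cores
    memory_mb: int = 512
    env: dict[str, str] = field(default_factory=dict)
    secrets: list[str] = field(default_factory=list)  # names of project secrets
    ports: list[PortMapping] = field(default_factory=list)

    def image_ref(self) -> str:
        """Full image reference, combining registry and image."""
        return f"{self.registry}/{self.image}"

    def validate(self) -> list[str]:
        """Return a list of problems found in the port mappings."""
        errors = []
        for mapping in self.ports:
            for port in (mapping.container_port, mapping.exposed_port):
                if not 1 <= port <= 65535:
                    errors.append(f"port {port} out of range")
        exposed = [mapping.exposed_port for mapping in self.ports]
        if len(exposed) != len(set(exposed)):
            errors.append("duplicate exposed port")
        return errors


web = Container(
    name="web",
    image="nginx:1.25",
    env={"LOG_LEVEL": "info"},
    ports=[PortMapping(container_port=80, exposed_port=8080)],
)
print(web.image_ref())  # docker.io/nginx:1.25
print(web.validate())   # []
```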

Deployments

A Deployment on OGC encapsulates all the information about a single deployment of a Package. Deploying a package places its applications on the K8s clusters that match its policies. A Deployment is organised into three parts: plans, statuses and workloads. All three should be tracked during a deployment to make sure the package is correctly configured.

Planning is a preliminary state that every Deployment passes through before it transitions to other states. During planning, the OGC orchestrator evaluates the capabilities of available clusters against the requirements of a Deployment to produce a Plan.
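Planning can be pictured as matching workload requirements against cluster capacity. The sketch below uses a greedy first-fit strategy chosen purely for illustration; the `Resources` type, the `make_plan` function and the cluster names are hypothetical, and the text does not describe how OGC's orchestrator actually weighs cluster capabilities.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu: float
    memory_mb: int
    gpus: int = 0

    def fits_in(self, other: "Resources") -> bool:
        return (self.cpu <= other.cpu and self.memory_mb <= other.memory_mb
                and self.gpus <= other.gpus)

    def minus(self, other: "Resources") -> "Resources":
        return Resources(self.cpu - other.cpu, self.memory_mb - other.memory_mb,
                         self.gpus - other.gpus)


def make_plan(requirements: dict[str, Resources],
              capacity: dict[str, Resources]) -> dict[str, str]:
    """Map each workload to the first cluster with enough free capacity.
    Raises ValueError if some workload cannot be placed."""
    free = dict(capacity)  # shallow copy; entries are replaced, never mutated
    plan = {}
    for workload, need in requirements.items():
        for cluster, available in free.items():
            if need.fits_in(available):
                plan[workload] = cluster
                free[cluster] = available.minus(need)  # reserve the capacity
                break
        else:
            raise ValueError(f"no cluster can run {workload!r}")
    return plan


clusters = {
    "cluster-eu": Resources(cpu=8, memory_mb=32768, gpus=1),
    "cluster-us": Resources(cpu=16, memory_mb=65536, gpus=4),
}
workloads = {
    "trainer": Resources(cpu=4, memory_mb=16384, gpus=2),
    "api": Resources(cpu=2, memory_mb=4096),
}
print(make_plan(workloads, clusters))
# {'trainer': 'cluster-us', 'api': 'cluster-eu'}
```

If no cluster has enough free capacity for a workload, this sketch raises `ValueError` rather than returning a partial plan.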

Deployment Plan

A deployment plan is the set of information required to instantiate a Deployment into a Cluster. It includes the definitions and locations of container instances, supporting services, VPN connections and other resources that need to be created and configured. A Plan is transient: it is discarded once the Deployment reaches the Running state.
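The transient nature of a Plan can be shown with a small state model. The text names only the Planning and Running states, so this sketch models just those two; the `Deployment` class and its methods are hypothetical and do not reflect OGC's API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class State(Enum):
    PLANNING = "Planning"
    RUNNING = "Running"


@dataclass
class Deployment:
    package: str
    state: State = State.PLANNING
    plan: Optional[dict[str, str]] = None  # workload name -> cluster name

    def set_plan(self, plan: dict[str, str]) -> None:
        """Plans are only produced while the deployment is in Planning."""
        if self.state is not State.PLANNING:
            raise RuntimeError("plans are only produced during Planning")
        self.plan = plan

    def mark_running(self) -> None:
        """Move to Running and discard the transient plan."""
        if self.plan is None:
            raise RuntimeError("cannot run without a plan")
        self.state = State.RUNNING
        self.plan = None


d = Deployment(package="my-package")
d.set_plan({"web": "cluster-eu"})
d.mark_running()
print(d.state.value, d.plan)  # Running None
```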

Workloads

In OGC, a workload is a running item within a Deployment.

View OGC's quick start guide to learn how Deployments can be managed on OGC.