Overview

Welcome

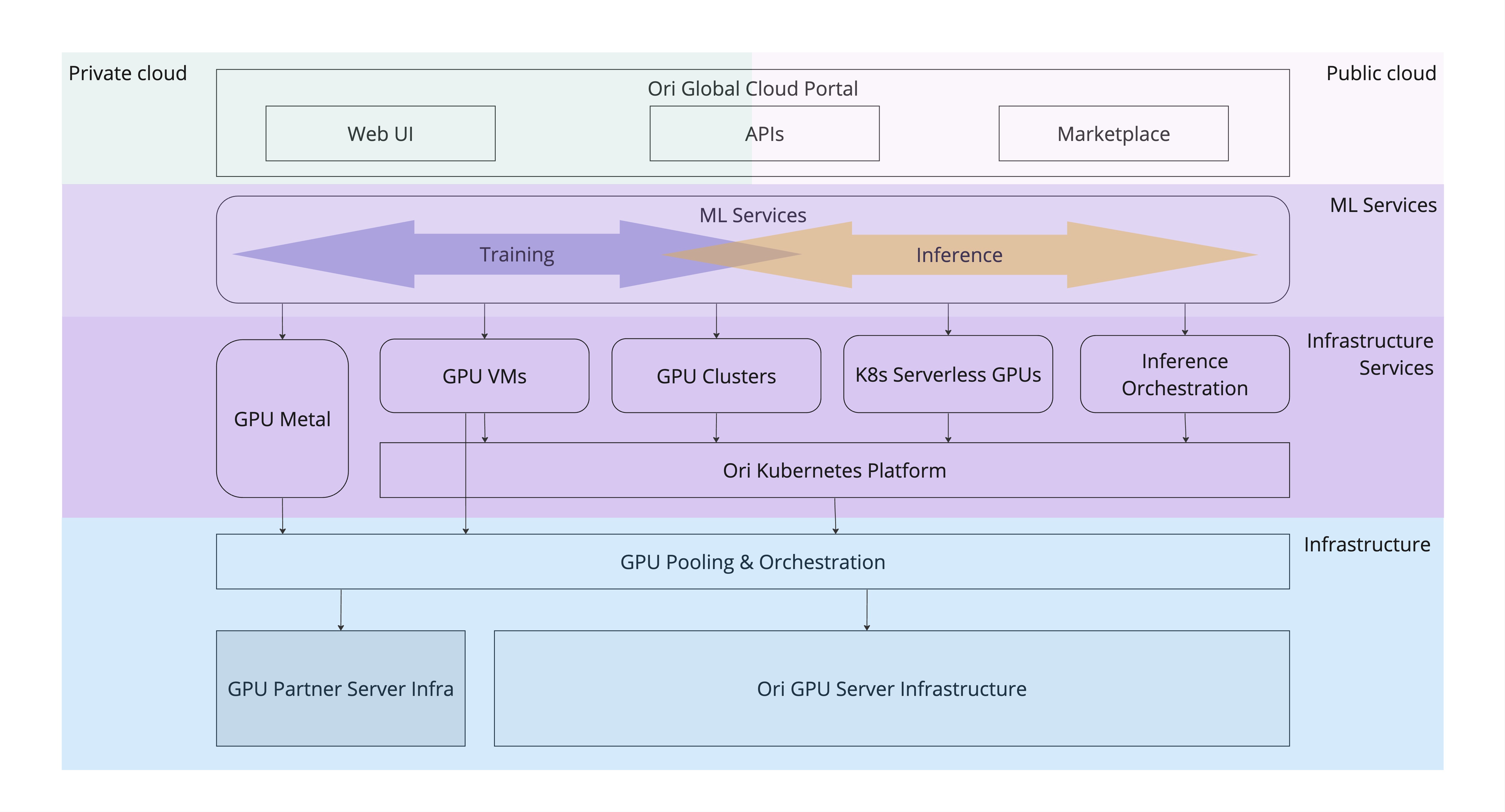

Welcome to the Ori Global Cloud (OGC) documentation! OGC is an end-to-end AI/ML cloud specializing in GPU resources and services tailored for Machine Learning (ML) and Artificial Intelligence (AI) applications. We are dedicated to empowering developers, data scientists, and organizations with advanced cloud solutions.

Our Services

OGC offers a suite of services designed to meet the unique requirements of ML and AI, providing scalable, efficient, and robust cloud infrastructure:

Ori GPU Metal: Our GPU bare-metal service offers reserved compute power for ML training and other intensive workloads by providing direct access to physical GPU hardware. With no virtualization overhead, it delivers maximum performance and control, making it suitable for the most demanding ML training tasks, high-performance computing (HPC), and situations where data sovereignty and regulatory compliance are critical.

Ori GPU VMs (Virtual Machines): Our GPU VM-as-a-Service (VMaaS) offering is tailored specifically for ML and AI applications. These virtual machines feature state-of-the-art GPUs and are tuned to handle complex, intensive ML workloads, providing the computational power needed for high-speed data processing, deep learning training, and advanced analytics. With GPU VMs, you can expect faster model training, real-time inference, and better overall performance for your AI-driven projects.

Ori GPU Serverless: Our serverless Kubernetes service provides high-performance GPU resources without the hassle of managing infrastructure, so you can focus on building and optimizing your ML models. Automated resource scaling keeps GPU utilization high for cost-effectiveness and performance. The service is ideal for demanding AI/ML tasks that need rapid, on-demand GPU resources, such as model training and real-time inference.

Ori GPU Clusters: Our GPU Kubernetes Clusters service is tailored for running AI/ML applications. The clusters are equipped with powerful GPUs to handle the most demanding AI workloads, from deep learning model training to real-time data analytics, combining the scalability and flexibility of Kubernetes with the raw power of GPU computing. Your AI projects stay efficient, scalable, and manageable regardless of their size or complexity, while we handle the underlying infrastructure so you can focus on innovation and development.

Ori ML Inference Orchestration: An orchestration service for executing ML inference consistently across distributed computing environments, leveraging the combined strengths of multiple cloud platforms. Distributing inference workloads across clouds provides high availability, redundancy, and geographic distribution. The service is designed to optimize resource utilization, reduce latency by bringing computation closer to data sources, and scale flexibly. It also enables more efficient load balancing and better handling of peak demand, for consistent and reliable performance.

Ori ML Services: Ori Global Cloud's ML as a Service (MLaaS) is a comprehensive offering for businesses and individuals who want to implement and scale their ML and AI initiatives quickly. It is designed to get users up and running fast, eliminating the complexity typically involved in setting up ML environments from scratch.

- Ori ML VMs: This service offers virtual machines pre-installed with a comprehensive suite of software tailored for ML needs. It allows for the immediate deployment of ML models and accelerates the development process. The key benefits include:

  - Ready-to-Use Environment: Quick setup with pre-installed ML frameworks and tools, saving time and resources on environment configuration.

  - Scalability and Flexibility: Easily scale resources up or down based on your workload requirements.

  - Enhanced Performance: Optimized for ML tasks, ensuring efficient use of GPU resources for faster model training and processing.

  - Security and Reliability: Robust security features and reliable infrastructure for secure and uninterrupted ML operations.

- Ori Kubeflow: Combines the scalability of Kubernetes with the ML toolkit of Kubeflow, providing an integrated platform for managing ML workflows. Benefits include:

  - Streamlined Workflow Management: Simplify the deployment, orchestration, and scaling of ML models in Kubernetes environments.

  - End-to-End ML Solution: A comprehensive toolkit for every stage of the ML lifecycle, from data preparation to model serving.

  - Flexibility and Portability: Develop and deploy models across various environments, supporting a wide range of ML frameworks and tools.

  - Collaborative and Efficient Development: Facilitate collaboration among teams and improve the efficiency of ML projects.


Capabilities - How Ori helps your AI/ML projects

OGC's cloud infrastructure supports a broad range of ML and AI capabilities:

Data Processing and Analytics: Efficiently process and analyze large datasets, crucial for the initial stages of the ML lifecycle.

Model Development and Training: High-performance GPUs for developing and training complex ML models, reducing time-to-insight.

Automated Machine Learning (AutoML): Simplify model development with minimal coding, enabling creation of high-quality models with less expertise.

Model Optimization and Tuning: Tools and services for fine-tuning ML models to ensure peak performance and accuracy.

Inference: Deploy models in an environment optimized for high-speed inference, enabling real-time analytics and rapid decision-making.

ML Operations (MLOps): Comprehensive support for the ML lifecycle, including model testing, deployment, and monitoring.

Collaborative ML Development: Tools supporting shared workspaces, version control, and integrated development environments (IDEs) to facilitate effective collaboration.

Join Ori Global Cloud

You can join Ori Global Cloud (OGC) for free and explore the platform, its features, and the services it offers.

Follow these simple steps to get started with OGC:

- Create an Ori Global Cloud account by visiting this link.

- Fill in the required fields and click Submit.

- If you signed up with social sign-on, you're in: just provide your name and your organisation's name.

- If you signed up with your email address, you will receive an email asking you to verify your address. Click “Confirm email”. If you run into any issues, contact our Support team.

- Log in, explore, and enjoy!

Although you can explore the platform for free, to use its full capabilities, such as spinning up GPU infrastructure, you will need to subscribe to a payment plan and provide your payment details.

Learn More Here

- 🚩 Quick Start Guide: Quick step-by-step guide to using OGC;

- 📝 Basic Concepts: Provides a high-level view of OGC's architecture and concepts;

- 🛠️ How To Guides: Step-by-step guides for specific tasks in OGC;

- 🧭 Documentation: Detailed documentation and user guide for OGC;

- 🗺️ Tutorials: Examples of deploying application services with OGC;

- ⌨️ Commands (CLI): Using the OGC Command Line Interface (CLI);

- 💵 Billing & Payments: How Billing and Payments work;

- 📑 Billing Agreement: Ori Billing Agreement terms and conditions;

- 🙋‍♀️ Support: Get help and read Frequently Asked Questions.