Ori ML Inference Orchestration

Introduction to Ori Inference Orchestration

Overview

Welcome to the Ori Inference Orchestration documentation. This capability is central to the mission of Ori Global Cloud (OGC): streamlining the Machine Learning Operations (MLOps) lifecycle for ML practitioners and organizations. Ori Inference Orchestration lets users deploy, scale, and maintain their inference workloads easily and securely across multiple cloud environments. It is designed to minimize effort and complexity, simplifying the transition from testing to production deployment.

Key Features

- Multi-Cloud Deployment: Ori Inference Orchestration allows users to distribute and manage inference workloads across various cloud platforms, providing the flexibility to choose the best environment for their specific needs.

- Seamless Scaling: The orchestration tool dynamically scales resources to meet the demands of your inference workloads, ensuring that your applications are always responsive and efficient.

- Sustained Uptime: High availability is a cornerstone of Ori Inference Orchestration. It ensures that your inference services remain operational, with minimal downtime and consistent performance.

- Secure Operations: Security is built into every layer of the orchestration process, from data transit to execution, safeguarding your sensitive ML models and datasets.

- Effortless MLOps: By abstracting the complexities involved in deployment and scaling, Ori Inference Orchestration makes it easier for teams to focus on refining their models and algorithms rather than on the operational aspects of MLOps.

Simplifying MLOps

Ori Inference Orchestration is not just about running ML models; it's about creating a seamless MLOps experience. From the moment you're ready to test your models, to the point they're deployed into production, every step is designed to be as effortless as possible:

- Testing to Production: Transition smoothly from testing environments to production with orchestrated workflows that mirror your operational needs.

- Continuous Monitoring and Optimization: The orchestration capability includes monitoring tools to keep track of performance, along with optimization features that ensure your workloads are running as efficiently as possible.

- Collaborative Environment: MLOps is a team sport. Ori Inference Orchestration facilitates collaboration across teams, enabling multiple stakeholders to manage and monitor inference workloads in unison.

Getting Started

To begin leveraging the power of Ori Inference Orchestration:

- Set Up Your Environment: Configure your ML models within the Ori Global Cloud platform to be ready for deployment.

- Deploy Your Models: Utilize the orchestration tools to deploy your inference models to the desired cloud environment.

- Monitor and Scale: Keep an eye on performance metrics and scale your resources as needed, directly through the platform's intuitive interface.

Ori Inference Orchestration is your partner in deploying robust, scalable, and secure ML inference workloads. It's time to simplify your MLOps and accelerate your journey from concept to production with Ori Global Cloud.

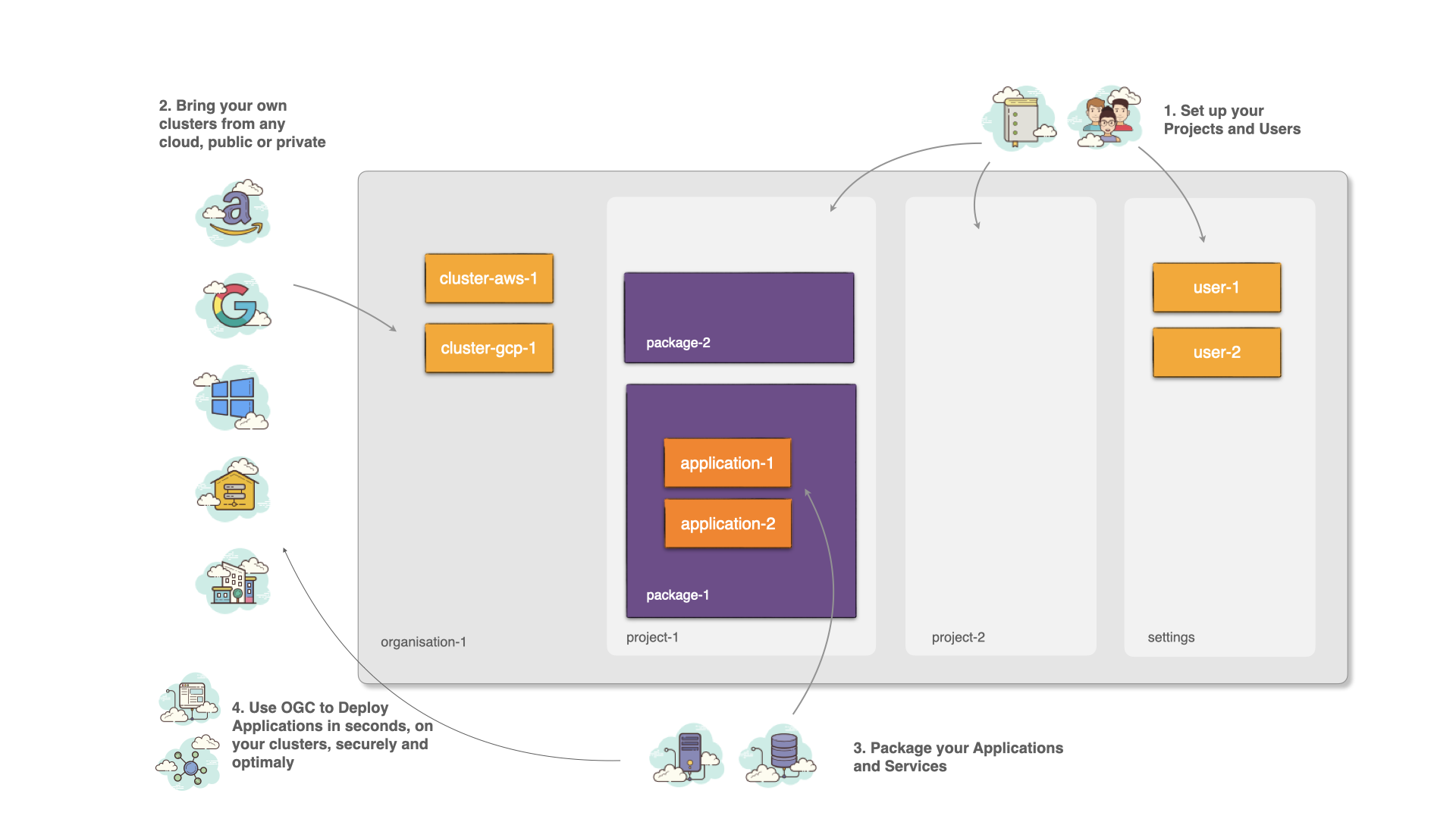

The core components of Ori Inference Orchestration are:

- Organisations provide tenancy with strong separation of resources on the Platform.

- Projects enable Organisation owners to separate their resources per team, each with its own secrets, registries and packages. The OGC Free Tier supports a single Project, which keeps things simple as you start using OGC.

- Clusters are automatically and securely attached to an Organisation via BYOC functionality.

- Packages enable Application Services to be configured for multi-cloud deployment within OGC, specifying policies, network routing, application container images and many other configuration items.

- Packages can be deployed across multiple clusters and clouds all at once, and OGC configures secure application-to-application connectivity across the multi-cloud infrastructure.
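To make the relationships between these components concrete, the following is a minimal sketch in Python. It is not the OGC API: every class, field, and method name here (`Organisation`, `Project`, `Package`, `add_project`, `free_tier`) is a hypothetical name chosen for illustration. It only models what the list above states: an Organisation owns Projects and Clusters, each Project keeps its own secrets, registries and packages, and the Free Tier is limited to a single Project.

```python
from dataclasses import dataclass, field


@dataclass
class Package:
    """Hypothetical model of a Package: an image plus its policies."""
    name: str
    container_image: str
    policies: dict[str, str] = field(default_factory=dict)


@dataclass
class Project:
    """Hypothetical model of a Project; each one has its own resources."""
    name: str
    secrets: dict[str, str] = field(default_factory=dict)
    registries: list[str] = field(default_factory=list)
    packages: list[Package] = field(default_factory=list)


@dataclass
class Organisation:
    """Hypothetical model of an Organisation (the tenancy boundary)."""
    name: str
    free_tier: bool = True
    projects: dict[str, Project] = field(default_factory=dict)
    clusters: list[str] = field(default_factory=list)

    def add_project(self, name: str) -> Project:
        # Assumption: the Free Tier limit is enforced when a Project is created.
        if self.free_tier and len(self.projects) >= 1:
            raise ValueError("The Free Tier supports a single Project")
        if name in self.projects:
            raise ValueError(f"Project {name!r} already exists")
        project = Project(name)
        self.projects[name] = project
        return project
```

In this sketch, two Projects in the same Organisation never share a secrets store, which mirrors the per-team separation described above.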

Sections

- Clusters: Onboard K8s clusters from any private or public cloud;

- Projects: Manage resources and deploy on Clusters on either an exclusive or a shared basis;

- Packages: Describe your Application Services and use policies to capture business, infrastructure and networking requirements;

- Deployments: Run your Application Services on the available Project Clusters that match your policies.
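The idea of a Deployment running only on Clusters that match a Package's policies can be illustrated with a small Python sketch. This is an assumption-laden illustration, not how OGC evaluates policies: the `Cluster` class, the label keys (`provider`, `gpu`), and the `matching_clusters` function are all hypothetical, and the policy is modeled as a set of label values that a Cluster must have.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical Cluster with free-form descriptive labels."""
    name: str
    labels: dict[str, str] = field(default_factory=dict)


def matching_clusters(policy: dict[str, str], clusters: list[Cluster]) -> list[Cluster]:
    """Return the clusters whose labels satisfy every entry in the policy.

    Assumption: a policy is a mapping of required label values. An empty
    policy places no constraints, so every cluster matches.
    """
    return [
        cluster
        for cluster in clusters
        if all(cluster.labels.get(key) == value for key, value in policy.items())
    ]


# Example Project Clusters (illustrative values only).
project_clusters = [
    Cluster("cluster-a", {"provider": "aws", "gpu": "true"}),
    Cluster("cluster-b", {"provider": "gcp", "gpu": "true"}),
    Cluster("cluster-c", {"provider": "aws", "gpu": "false"}),
]

# A Package policy that requires GPU-enabled Clusters on one provider.
selected = matching_clusters({"provider": "aws", "gpu": "true"}, project_clusters)
```

Here `selected` contains only `cluster-a`; relaxing the policy to `{"gpu": "true"}` would also select `cluster-b`, so the same Package could be deployed across both clouds at once.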